Live Performances with Digital Humans: What You Need to Plan

- Mimic Productions

Live Performances with Digital Humans are no longer side experiments at festivals. They are full productions that combine 3D character creation, motion capture, real time rendering, show control, audio, and lighting into a single system that has to work on cue, in front of an audience.

When this works, the crowd does not talk about technology. They talk about presence. They feel that a virtual performer is sharing the room with them, whether it appears on an LED wall, as a holographic projection, or inside a club environment.

Studios with a dedicated digital hologram studio in Berlin and real time character pipelines have taken techniques from film and games and adapted them for concerts, museum shows, and club performances. The practical lesson is simple. If you plan the pipeline with the same care as the creative, Live Performances with Digital Humans can be as immediate and emotional as any physical act on stage.

Understanding live digital human performances

A live digital human performance sits at the intersection of three disciplines.

First, there is the character itself: a production ready 3D human or avatar with a clean rig, believable shaders, and wardrobe that can hold up at stage resolution. Studios that build photo realistic 3D character models for film and games use the same foundations here.

Second, there is the performance layer. This can be live motion capture, pre captured performance, hand animation, or a controlled mix. On a club project such as the virtual human performance for DJ Yellock in Tokyo, the live energy depends on how fast and accurately that performance layer drives the digital double.

Third, there is the delivery system. The digital human is rendered in a real time engine and sent to an LED wall, a holographic unit, projection, or broadcast pipeline. This is where it meets the venue.

Live Performances with Digital Humans can be completely live, completely pre rendered, or a hybrid. The planning changes with each option, but the core idea is the same. You are building a performer that exists in software but must behave like a reliable member of the cast.

Strategic decisions before you start

Before any scanning or mocap, align on a few decisions that will shape the entire show.

Decide what kind of presence you want. A digital double that matches the artist exactly will demand more detailed capture, approvals, and facial rigging than a stylised virtual persona. A recreated historical figure raises its own ethical and technical questions.

Define how live the show must be. A fully live act driven by the performer requires a robust control system and a generous rehearsal schedule. A mostly pre rendered show with a live segment in the middle focuses more effort on animation and look development. Live Performances with Digital Humans often land somewhere in between these extremes.

Clarify distribution. A single museum installation, a touring arena act, and a one night club performance all call for different levels of robustness, redundancy, and crew training. This is also the right moment to involve a real time integration team that understands how engines, media servers, and show control talk to each other. Early technical planning saves time and cost later.

Building the digital performer

The digital human is the anchor of the show. If the model, textures, or rig are weak, every rehearsal will expose those weaknesses.

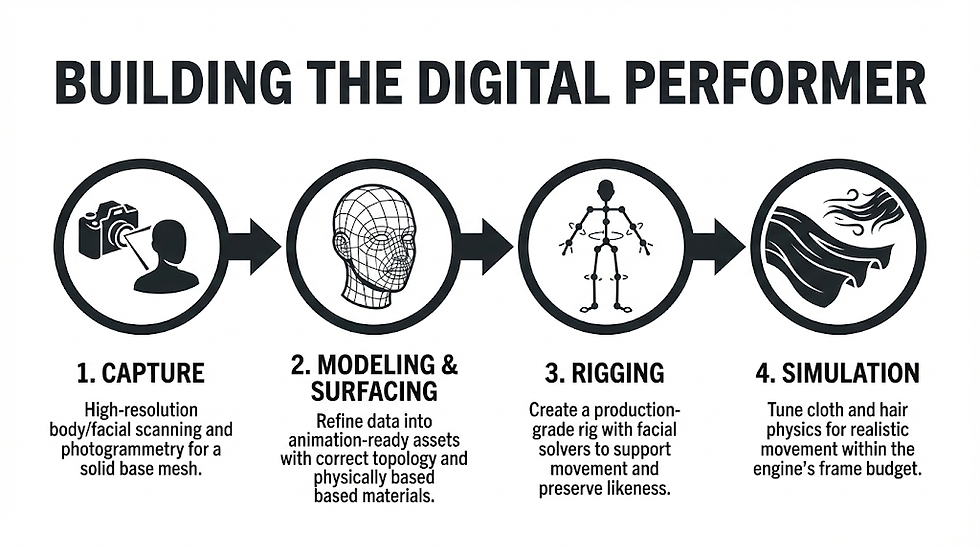

The process usually begins with capture. High resolution body scanning or controlled photogrammetry provides a solid base mesh. Detailed facial reference sessions capture expression range, skin detail, and eye behaviour. Wardrobe reference and cloth tests inform later simulation choices.

Modeling and surfacing teams then refine this data into animation ready assets. Correct topology allows the body and face to deform without visible artefacts. Skin, eyes, hair, and clothing are shaded with physically based materials that can be translated from offline look development into a real time engine.

Rigging transforms this static mesh into a performer. A production grade body and facial rig supports everything from broad stage gestures to subtle expression work. Corrective shapes, muscle systems, and facial solvers help preserve likeness when the character moves. When a studio offers end to end 3D character services, this rigging work is tightly aligned with both capture and rendering.

Finally, cloth and hair simulation are tuned with live use in mind. The character has to look good, but it also has to run within the frame budget of the chosen engine while it shares resources with lighting, effects, and stage content.
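The frame budget mentioned above is simple arithmetic: the target frame rate fixes how many milliseconds each frame may take, and every system in the scene spends part of that time. The sketch below is only an illustration. The per-system costs and the names `frame_budget_ms` and `remaining_budget_ms` are hypothetical, and real numbers would come from profiling the actual engine scene.

```python
from typing import Mapping


def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def remaining_budget_ms(fps: float, costs_ms: Mapping[str, float]) -> float:
    """Headroom left after all per-frame costs; negative means over budget."""
    return frame_budget_ms(fps) - sum(costs_ms.values())


# Hypothetical per-frame costs in milliseconds (illustrative values only).
costs = {
    "character": 6.0,
    "cloth_and_hair": 3.5,
    "lighting_and_effects": 4.0,
    "stage_content": 2.0,
}

for fps in (30, 60, 120):
    headroom = remaining_budget_ms(fps, costs)
    status = "fits" if headroom >= 0 else "over budget"
    print(f"{fps} fps: budget {frame_budget_ms(fps):.2f} ms, "
          f"headroom {headroom:.2f} ms ({status})")
```

With these assumed costs the scene fits at 30 and 60 frames per second but not at 120, which is the kind of trade-off that decides how heavy cloth and hair simulation can be.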

Control systems and motion capture for the show

Once the digital performer exists, you need to decide how it will move on the night.

For many projects, full body motion capture drives the character in real time. The artist wears a suit and possibly facial markers, and their movements are retargeted onto the digital double with minimal delay. A partner with dedicated motion capture services will also design the capture volume so that it fits the physical constraints of the venue.

Other shows use a mix. Body performance may be pre captured in a controlled session while facial performance is captured live, allowing the performer to respond to the crowd while the large scale choreography remains fixed. In some formats, the character is entirely keyframed and only camera and framing are controlled live.

The DJ Yellock performance in Tokyo is a useful example. The brief was to create a virtual human that felt spontaneous in a club environment, with low latency response to music and crowd interaction. That required careful optimisation of the retargeting stack, cloth behaviour, and shading so that visual fidelity did not compromise responsiveness.

For Live Performances with Digital Humans, latency is not just a technical number. It is part of the audience experience. If the digital body lags behind the music or the voice, the illusion breaks.

Real time engine and venue integration

A live digital character spends its performance inside a real time engine scene. The quality and stability of that scene depend on how well it has been integrated with the rest of the show.

Practically, you are answering a few questions.

Which outputs are required? Typical targets are an LED wall behind the stage, a side screen for close ups, a holographic stage element, or a feed for broadcast. Each output has its own resolution, frame rate, colour pipeline, and hardware.

How is the system structured? Most serious productions run a dedicated render machine for the character and one or more backup machines configured for fast failover. Network paths carry mocap data, timecode, and show control cues between departments. A specialist team in real time integration will define these paths, test them under load, and document recovery procedures.

How much delay is acceptable? A museum narration can tolerate more latency than an improvised club set. The pipeline must be tuned so that the total delay from performer to screen remains inside that budget, including capture, retargeting, rendering, and output.
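The delay budget described above can be sketched as a sum over the pipeline stages, compared against a limit chosen for the format. This is a minimal illustration, not a measurement tool. The stage timings, the two budgets, and the names `LatencyReport` and `check_latency` are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class LatencyReport:
    total_ms: float
    budget_ms: float
    worst_stage: str

    @property
    def within_budget(self) -> bool:
        return self.total_ms <= self.budget_ms


def check_latency(stages_ms: Dict[str, float], budget_ms: float) -> LatencyReport:
    """Sum the per-stage delays and identify the slowest stage."""
    total = sum(stages_ms.values())
    worst = max(stages_ms, key=lambda name: stages_ms[name])
    return LatencyReport(total_ms=total, budget_ms=budget_ms, worst_stage=worst)


# Hypothetical measured delays per stage, in milliseconds.
stages = {"capture": 12.0, "retargeting": 8.0, "rendering": 17.0, "output": 35.0}

# Hypothetical budgets: a tight one for a club set, a looser one for narration.
club = check_latency(stages, budget_ms=60.0)
museum = check_latency(stages, budget_ms=250.0)

for label, report in (("club set", club), ("museum narration", museum)):
    verdict = "OK" if report.within_budget else "too slow"
    print(f"{label}: {report.total_ms:.0f} ms of {report.budget_ms:.0f} ms "
          f"({verdict}), slowest stage: {report.worst_stage}")
```

Under these assumed numbers the same pipeline passes for the narration but not for the club set, and the report points at the output stage as the first place to look.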

When holographic units are involved, the digital hologram studio in Berlin coordinates closely with the hardware provider. Camera angles, safe zones, and reflections are tested long before the first audience arrives.

Creative direction and rehearsal workflow

Technology alone does not make a performance memorable. The digital human needs direction just as any actor would.

Creative discussions should define the character’s role. Are they the main act, a host, a guide, or a counterpart to a human performer? The decisions here will inform posture, timing, and how much the character speaks or stays silent.

Rehearsals are where Live Performances with Digital Humans become real. You are rehearsing not just a person, but a network of systems. Building time for three kinds of rehearsal tends to pay off.

Technical rehearsals test capture, engine scenes, outputs, and show control without focusing on performance quality. Character rehearsals allow the performer to explore physicality inside the digital body, finding gestures that feel truthful on screen. Full dress runs bring lighting, sound, and audience stand ins together so that the entire system can be tuned at show scale.

Recording these sessions from the engine’s point of view allows rigging, animation, and lighting teams to refine the character between runs. Small adjustments to eye focus, cloth settings, or camera lenses can significantly change how present the character feels in the room.

Consent, likeness rights, and ethics

Any production that uses a human likeness in digital form carries a responsibility to treat that likeness with care. For a live show, the digital human may continue to perform in venues where the original person is not physically present.

Responsible planning includes clear written agreements that cover scanning, ongoing use of the likeness, and potential reuse of the character in future projects. These agreements should spell out approval processes for new uses and what happens at the end of the contract.

If a performance involves a deceased figure or a reconstruction built from archive material, transparency with estates and audiences matters. The distinction between consented digital humans and unauthorised manipulation is important both ethically and legally.

Studios that work routinely with digital doubles, holograms, AI avatars, and conversational characters typically maintain internal guidelines around consent and audience communication. Bringing those expectations into the conversation early avoids difficult discussions later in the schedule.

Budgeting, scheduling, and risk management

From a production standpoint, Live Performances with Digital Humans feel more like combining a film shoot with a tour than commissioning a single piece of content.

Budgets must account for character creation, rigging, look development, motion capture sessions, engine integration, rehearsals, and on site support. Hardware and services for hologram units, LED walls, or media servers sit alongside that. When the same company handles 3D character services, motion capture, and real time integration, handoff costs between departments are reduced.

Scheduling should allow for iterative loops. The more detailed the character and the closer the cameras, the more iteration you will need to reach stable, believable behaviour. Compressing these loops into a short window increases the risk of visible artefacts in the final show.

Risk management focuses on two practical questions.

What happens if a system fails during the show? Answering this means planning backup render machines, fallback content, and clear procedures for operators.

What happens if the performer cannot be present? In some cases, recorded mocap can stand in. In others, an alternate performer can drive the digital body, provided contracts and rehearsal time allow for it.
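For the first question, one common pattern is to pick the output source by priority and fall back when a machine stops reporting. The sketch below assumes each render machine sends a periodic heartbeat. The function `select_source`, the machine names, and the timeout are hypothetical, and a real show would rely on the failover features of its media server or show control system rather than a script like this.

```python
from typing import Dict, Sequence


def select_source(
    priority: Sequence[str],
    last_heartbeat: Dict[str, float],
    now: float,
    timeout_s: float,
    fallback: str = "fallback_content",
) -> str:
    """Return the first source in priority order with a recent heartbeat.

    If no render machine has reported within timeout_s seconds,
    return the name of the pre-rendered fallback content instead.
    """
    for name in priority:
        seen = last_heartbeat.get(name)
        if seen is not None and now - seen <= timeout_s:
            return name
    return fallback


# Hypothetical machine names and heartbeat times (seconds on a shared clock).
priority = ["render_primary", "render_backup"]
heartbeats = {"render_primary": 100.0, "render_backup": 104.8}

# At t=105.0 the primary has been silent for 5 s, so the backup is chosen.
active = select_source(priority, heartbeats, now=105.0, timeout_s=0.5)
print(active)
```

The timeout is a design choice: a short value switches quickly but risks flapping on a brief network hiccup, so it should be tested under load during technical rehearsals.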

Comparison table

| Aspect | Pre rendered digital human show | Fully live controlled digital human show |
| --- | --- | --- |
| Production effort | Heavy upfront animation, look development, rendering, and review | More effort in capture systems, rig responsiveness, and show control, less offline rendering |
| Creative flexibility during the show | Fixed sequences, identical performance each time | Improvisation, audience interaction, pacing changes in real time |
| Technical risk profile | Lower live risk once finalized, depends mainly on playback stability | Higher live risk, more moving parts, needs redundancy and skilled operators |
| Audience perception | Extremely polished, cinematic quality | Feels more alive and present, especially in intimate settings |

Applications

Stage ready digital humans are now appearing in many areas.

In music and nightlife, virtual performers extend how an artist can appear. A photorealistic digital double can headline a club night or festival while the real person remains elsewhere. A stylised persona can host a live stream and an in venue show with the same identity.

In brand and product experiences, virtual ambassadors introduce products, guide guests through installations, and appear consistently across events, broadcast spots, and interactive platforms. When these characters are built on the same foundations as film ready digital humans, they feel grounded rather than gimmicky.

Museums and cultural institutions use digital figures to embody historical voices and bring archive material into contemporary spaces. Here, the balance between accuracy, interpretation, and respect is crucial.

Sports, fashion, and immersive training also draw on these techniques. Digital twins of athletes support analysis and fan engagement on large screens. Virtual models walk runways that could not exist in the physical world. Training scenarios place realistic digital humans into simulations where stakes are high and repetition matters.

In all of these cases, the underlying craft is consistent: robust character assets, reliable mocap or animation pipelines, and a real time engine scene that behaves predictably in front of an audience.

Benefits

When planned and executed with care, Live Performances with Digital Humans offer more than a novelty moment.

They extend presence. An artist can maintain a global touring presence through digital shows while limiting physical travel. A historical figure can speak to a contemporary audience in a way that feels immediate and personal.

They expand creative vocabulary. Digital bodies can perform moves, transformations, and environment changes that no physical stage could support safely or practically. The camera can move from a close up to an impossible aerial shot in a single beat without breaking continuity.

They provide continuity for brands and franchises. A well designed digital character can appear in film, games, interactive experiences, and live events without losing identity. Changes in wardrobe, styling, or even age can be handled inside the same asset.

Finally, they offer new forms of interaction. Combined with conversational systems and sensory input from the venue, a virtual host can address individual audience members, react to crowd energy, and adapt a script in real time.

Future outlook

As real time engines mature and capture hardware becomes more compact, the barrier to staging Live Performances with Digital Humans will continue to fall. Smaller venues will experiment with virtual hosts and performers, while large scale productions will push toward more complex interaction and narrative.

We can expect more hybrid shows where a physical performer shares the stage with their digital counterpart, sometimes handing control back and forth within a single set. Audience tools such as mobile devices, wearables, or spatial computing headsets will give spectators new ways to influence what the digital character does.

At the same time, industry norms around consent, credit, and reuse of digital likenesses will become more formal. Teams that already integrate ethical practice into their pipelines will be well placed as those standards solidify.

Frequently asked questions

How early should we start planning a show with a digital human?

For a new photoreal digital double, a planning window of several months is realistic. That time covers asset creation, motion capture, engine integration, and rehearsals. If you already have a finished character, the schedule can shorten, but live testing still takes time.

Can the digital human perform if the original artist cannot be present?

Yes, provided contracts and approvals allow it. Another performer can drive the digital double, or pre recorded performance can be used. The crucial point is that this possibility is discussed openly with the artist and codified in the agreement.

Do we always need full body motion capture?

Not necessarily. Some shows focus on upper body performance only. Others rely on pre animated body motion and live facial capture. The right choice depends on stage layout, camera framing, and how physical the performance needs to feel.

How reliable is a live digital human in a club or arena environment?

With a robust design, experienced operators, and clear backup plans, a virtual performer can be as reliable as a complex lighting or media server rig. The key is to treat it as core infrastructure rather than an add on.

What is the difference between a digital double and an AI driven avatar in this context?

A digital double is a faithful recreation of a specific person, usually driven by that person or a chosen performer. An AI driven avatar may be an original character, designed to respond to input and handle conversation. Both can appear on stage, but they carry different expectations around likeness, identity, and behaviour.

Conclusion

Live Performances with Digital Humans represent the point where character work, real time technology, and stage craft truly converge. When planned with care, they add a new dimension to concerts, cultural experiences, and brand events without replacing the value of human performers.

The practical work is detailed. It spans scanning, modeling, rigging, performance capture, engine integration, show control, and on site operation. It also spans contracts, consent, and ethical choices about how a likeness is used over time.

Studios that live inside this intersection, with teams for digital holograms, high fidelity character creation, motion capture, and real time integration under one roof, can guide productions through that complexity. The result is a virtual performer that feels present, responsive, and grounded, whether it is filling an arena, guiding visitors, or transforming a night in a club.

For inquiries, please contact: Press Department, Mimic Productions info@mimicproductions.com
